This is the the 12th blog post in the series about Azure Arc enabled Data Services.

The initial post can be found at Azure Arc for Data Services, part 1 – Intro and the whole collection can be found at Azure Arc enabled Data Services.

In this post I will focus on some of the most common tasks with SQL Managed Instance on Azure Arc enabled Data Services, such as copying & managing data into the containers and a way of importing & exporting your backups.

Note that the list of the operations at this point looks more like a repetition of previous blog post, but besides providing a generic compilation and lookup material, it will be expanded in the nearest future.

As described in the section Find Out The Name Of Your Pod & Container in Azure Arc for Data Services, part 10 – Configuring SQL Managed Instance whatever you do with the individual container of SQL Managed Instance on Azure Arc enabled Data Services, you need to know the exact name of the respective pod and the container, to be able to connect to them.

In my test case, the name of the pod sql20-0 and the name of the pod is arc-sqlmi, given that I have created my SQL Managed Instance with the name sql20.

I notice that the naming for the SQL Managed Instance pods within the Azure Arc enabled Data Services looks with the following pattern:

[sqlMiName-X], where sqlMiName is the name we have chosen for our our SQL Managed Instance and X is the numerical number of the instance (0 in the case of a single instance and 0,1,..,n in the case of using Availability Groups with n replicas (indeed, you need to specify 2 or more replicas to get an Availability Group working).

[arc-sqlmi] is the name that at least for the last 5-6 months is being used for the container with SQL Managed Instance.

Please, please, please – do not take it for granted, because development team can change any of this things at any given day, without any warning – and it is totally fair.

The feature is still not under General Availability and even after it will be, there is no promise that there won’t be a necessity to do a given change. Also, not that potentially one can simply create a SQL MI with the name sql20-0 and it will start colliding with your expectation. Please, be careful and follow the suggested path to determine the exact name of the pod and of the container you are going to work with.

Azure Blob Storage

It might sound funny, but the best way right now, if you have a direct connection to Azure (and the respective ports open in the whole network that connects to it), then the easiest and for me personally, the “bestiest” way to deal with Backups, Restores and hence migrations to SQL Managed Instance is to use Azure Blob Storage.

Using the SQL Server 2016+ path with the SAS Key we can set up our credential that will allow us to write and read to and from the respective Azure Blob Storage (and make sure you limit it to a specific container (let’s pretend there that my blob storage account name is [superazarcsqlmi] and the container name is [arcbackup] and the respective SAS key is correct :)

CREATE CREDENTIAL [arcbackup.blob.core.windows.net/arcbackup] WITH IDENTITY = 'SHARED ACCESS SIGNATURE', SECRET = 'sv=thatbigandrandomstring&someofthe=dates&will=bethere&aswell=asthe';

Just do not forget, that the maximum for a single file with the API supported by any current (March 2021) SQL Server version is 195GB and if you have a reasonably big Database, you will have to do striping to multiple backup files.

Now you can simply create a database test by using the following command on the single instance of SQL Managed Instance:

CREATE DATABASE [TEST];

and then just simply use the backup command

BACKUP DATABASE [TEST] TO URL = "arcbackup.blob.core.windows.net/arcbackup/testbackup_201103.bak" WITH COMPRESSION, MaxTransferSize = 4194304, BlockSize = 65536;

or any of your favourite solutions for that matter.

The restore will look pretty simple, as expected (and notice, that you will have to follow the path indicated Azure Arc for Data Services, part 11 – SQL Managed Instance Availability Group in order to make it work for the Availability Groups):

RESTORE DATABASE [TEST] FROM URL = "arcbackup.blob.core.windows.net/arcbackup/testbackup_201103.bak" WITH REPLACE, STATS = 1,RECOVERY

This way you are a kind of free of the whole hustle with the storage at the Kubernetes & your Infrastructure level.

Copy Backups into and from the SQL Managed Instance container

Sometimes we can’t use Azure Blob Storage (maybe because there is no direct access or maybe because we are not using it at all) and there is a need to deliver a backup from the outside of SQL MI into the container, or maybe extract the local backup – and for that purpose it is good to have the necessary commands at hand.

Let split this category into the following ones:

– Copying local file into the container

– Download file from the container

Copy local file

This operation is very much the same as the copying the custom mssql-custom.conf configuration into the container and can be simply executed with the following command (the pod name is still sql20-0 and the container name is arc-sqlmi:

kubectl cp mytest.bak sql20-0:/var/opt/mssql/data/mssql-custom.conf -c arc-sqlmi -n arc

Notice that it is just a rather simple KUBECTL CP command, and that we are specifying the destination folder, and if you are still using the defaults, without creating and mapping the backups to the /var/opt/mssql/data/backup folder, for example – we are using the same folder where the data & log files are to be found by default.

Download remote file

Should we need to download the file from some internet location, we can simply use the WGET and download the respective file into our container.

Let’s say we select the AdventureWorks2019 backup from the GitHub – (using the following link https://github.com/Microsoft/sql-server-samples/releases/download/adventureworks/AdventureWorks2019.bak) and download it once again into the default data & log folder /var/opt/mssql/data:

kubectl exec -it sql20-0 -c arc-sqlmi -n arc -- wget -P /var/opt/mssql/data https://github.com/Microsoft/sql-server-samples/releases/download/adventureworks/AdventureWorks2019.bak

This is incredibly easy and you do not have to reinvent the wheel.

Run commands on your local SQL Managed Instance

To get the full conscience about the previous command WGET command, we need to understand that we are actually running (executing) commands on the container with the KUBECTL EXEC command and that the same very way can be used in order to use much more power.

For example, we can list the files in the respective folder /var/opt/mssql/data with the good old ls command:

kubectl exec -it sql20-0 -c arc-sqlmi -n arc -- ls /var/opt/mssql/data

The same way can and in the case of Availability Groups on SQL Managed Instance SHOULD be used for working with a particular instance in a remote way, without directly connecting through the external endpoint. We can simply us the pre-installed sqlcmd tool for running commands, such as RESTORE against our SQL Managed Instance, restoring our downloaded database from the local folder:

kubectl exec -it sql20-0 -c arc-sqlmi -n arc -- /opt/mssql-tools/bin/sqlcmd -S localhost -U arcadmin -P ThatsAgOodPa33w0rdTO3em3mbrr -Q "RESTORE DATABASE [AdventureWorks2019] FROM DISK = N'/var/opt/mssql/data/AdventureWorks2019.bak' WITH FILE = 1, MOVE N'AdventureWorks2017' TO N'/var/opt/mssql/data/AdventureWorks2019.mdf', MOVE N'AdventureWorks2017_log' TO N'/var/opt/mssql/data/AdventureWorks2019_log.ldf', NOUNLOAD, REPLACE, STATS = 1"

In the end we pretty much can run any command, by simply opening the bash command prompt within our container:

kubectl exec -it sql20-0 -c arc-sqlmi -n arc -- /bin/bash

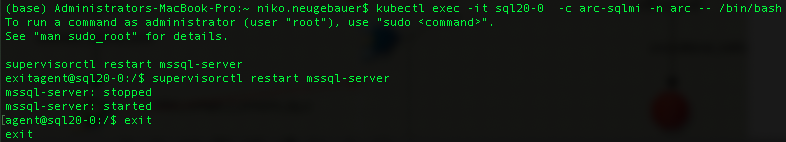

We can go as far as even restart SQL Managed Instance by opening the bash and forcing the supervisorctl to restart our service:

kubectl exec -it sql20-0 -c arc-sqlmi -n arc -- /bin/bash supervisorctl restart mssql-server exit

Your needs and your imaginations are the keys – wether you need to create a new configuration with mssql-conf or use any other habitual os-specific Linux tools, as long as you have them on your container (or you install them there) – you are free to advance with your operation.

Azure Data Studio

You can already do a couple of operations in the Azure Data Studio, but it is looks pretty in the early stage and anything before the General Availability of Azure Arc should be considered as beta, in my opinion.

Final Thoughts

The integration & migration stories of Azure Arc enabled Data Services SQL Managed Instance are … well … far from being complete.

I won’t go in to all the details of the services that I expect to be delivered in order for this service to become a first-class citizen of the Azure PaaS, but

– Having a full backup retention service to Azure Blob Storage (like after 7 days, of local backups copy them into this Azure Blob Storage account automagically)

– Migration path for online migration (starting with restore of the differential & log backups for SQL MI in the High Availability Mode) and support for transactional replication (as destination) and of course Azure Migration Service support

– Easy management for the most common operations (I would love to have a rather simple Azure AZDATA CLI for copying and downloading data in/to containers, plus an easier restart would be an advantage) – I think it is unfair to expect an average DBA to be able to run Kubernetes commands with ease (right now, it will be different in 5 years for sure)

are still missing in action.

– Automated patching & Upgrade (yeah, a huge topic)

This does not change a bit of appreciation of this revolutionary attempt, but the expectations are definitely high, given that the team themselves announced that some of the above listed features are coming.

to be continued with Azure Arc enabled Data Services, part 13 – Monitoring with Grafana & Kibana

Everything I’ve read about Arc (including this series) is that database management is taking a big step backwards today – becoming more difficult, less reliable, more breaking points, less documentation, few job applicants familiar with any of the technologies involved – in exchange for possibly taking a huge step forward a few years from now.

SQL Server became popular in part because it had the point-and-click ease-of-use of Windows. Any sysadmin could get it up and running quickly, even if they weren’t doing a good job of it, because it didn’t require any specialized knowledge at first. I read these posts and say to myself, “Wow, I feel sorry for someone who tries to get this up and running quickly in 2021.”

I’m really glad you’re writing about it, though – it’s neat to see how management works. It reminds me a lot of columnstore back in 2012: it was horrible when it shipped, but the promise was there, and Microsoft put in the work to make it usable for more people. Now we’ll see if they do that same investment in Arc.

You are absolutely correct, sir!

This feature has a revolutionary feel, but it is quite far away from being an enterprise-grade citizen (2-3 years period, in my estimation).

The thing is that it is totally goes into the right direction strategically and it can potentially change the DB world in less than a decade.

In parallel, Microsoft is churning new versions for SQL Server on Linux and already got some mysterious High Availability implementation for K8s.

Once Kubernetes becomes a regular member of house of System Administrator (and by what I see with current clients – it does), there won’t be an easy path back.

And yes, I would be totally sorry for a regular SysAdmin trying to make things work right away as they are at the moment.