Continuation from the previous 35 parts, starting from https://www.nikoport.com/2013/07/05/clustered-columnstore-indexes-part-1-intro/

At the 12th Edition of SQLBits around 1 week ago I have had a short but interesting conversation about Columnstore Indexes, its sizes and its importance for rebuild processes with Ola Hallengren.

I have asked Ola to include some specific functionality into his Maintenance Solution regarding Columnstore Indexes, since there is absolutely no reason to do the same type of maintenance for them as it is being done for the RowStore.

Consider the 2 principal functions for table maintenance in SQL Server, Rebuild & Reorganize:

Reorganize

– for RowStore we are basically shifting positions of different data pages (8Kb) in order to lower a little bit defragmentation (lack of the sorted order) caused by the inserts & updates.

– for Columnstore we are invoking Tuple Mover which in this case has 2 known functionalities:

1) the default invocation causes it to look for closed Delta-Stores and compress them turning into Segments;

2) invocation with a hint (COMPRESS_ALL_ROW_GROUPS = ON) makes Tuple Mover additionally look for any open Delta-Stores, close them immediately and then compress them into Segments.

Rebuild

– for RowStore we use it to get the nice ordering for our Indexes, to reclaim some unused space, etc. When rebuilding, we are getting all of our data sorted for our B-trees and compressed, if we are using one of the available compression algorithms (ROW,PAGE)

– for Columnstore we use this function in order to reshuffle all the data between existing Row Groups (trying to get the best compression possible), and to then the compression algorithm does its best guesses for getting the best compression. The ordering of data is not guaranteed nor intended.

We are also reclaiming some space, should we have any rows deleted or updated (Marked in our Deleted Bitmap).

When to Rebuild and when to Reorganize Columnstore

The big question is how to decide when it is the right time to execute the maintenance and rebuild the Columnstore.

What kind of metrics should be used in order to advance with this decision ?

In my opinion the percentage of the Deleted Rows regarding to the total Number of Rows in the Columnstore might be a relevant metrics to consider.

There are a number of details here, like for example if we have 2 Million Rows spread over 2 Segments and at the same time 1 Million Rows marked in a Deleted Bitmap.

A very important issue in this case is if the Deleted Rows belong to just one Row Group or to the multiple ones, because our Segment Elimination should simply ignore this specific Row Group without touching a bit on the disk – Query Optimiser should be able to determine this and simply ignore it. Right now it is not the case, as I blogged in part 34, Deleted Segment Elimination.

We can still decide to rebuild our table even if we have 50% of each of our Segments deleted, but a simple single metric showing us just 1 number might not tell the whole story behind it.

The Catch

Let us try to list the possible metrics in the game:

– Number of Deleted Segments (Segments where all respective data has been marked as deleted)

– Segment Fragmentation Percentage in a Partition/Table

But we need to define the fragmentation for Columnstore Indexes –

in my opinion, since there is no natural ordering for the data inside compressed Segments, any rows marked as Deleted are introducing Columnstore fragmentation.

Notice, that I am definitely considering the Segments, because in the most cases they are definitely representing the vast majority of the Columnstore table structure. While Delta-Stores are using B-Tree, they are also unsorted because internally they are represented as HEAPs, plus any data inside the Delta-Stores can be easily deleted from them without any potential issues plugging the Segments.

In SQL Server 2014 the default behaviour for Tuple Mover is to run automatically every 5 minutes and unless you disable it with a Trace Flag 634 as described in Trace Flags & Query Optimiser Rules blogpost, you are absolutely fine already doing Reorganize on a regular schedule.

And so we can define the following fragmentation types:

1) Segment Fragmentation – as a percentage of the deleted rows relatively to the total number of rows of one particular compressed Segment

2) Size on Disk Fragmentation – as a percentage of the Columnstore index size, since every segment might be radically different to each other in the terms of the size.

Important issue here is the decision about the inclusion/exclusion of the completely deleted Segments into/out of the Fragmentation statistics, I consider it to be a complementary metrics in the case of the current SQL Server 2014 (RTM-CU2), which is a integral part of the total equation.

Another important note goes to the fact that even though we are dealing with different Segments for each of the columns, since every row is is spread between all Segments – their destiny of being active or being deleted is perfectly shared between all of the columns.

The Decision Flow

Thousands of blog posts, whitepapers & books have been written on the topic of when you should Reorganise and when to Rebuild a RowStore table, with typical values suggesting to effectuate a maintenance operation to my knowledge rounding 10% for the Reorganize and reaching 30% of the fragmentation means that it is a time to consider to rebuild the whole Table/Partition.

In real life, everyone have their own numbers which alter depending on the database(s) and server(s) in question.

My suggestion by default is to set your table/partition for a Rebuild operation when:

30% of the total fragmentation for the table/partition in question has been reached.

10% of the completely deleted Segments found in our Columnstore Table or Partition.

This recommendation serves of course until Microsoft implements a maintenance solution for removing and merging Columnstore Segments, because should it happen – the game would change & improve greatly.

Please vote up on the Columnstore Segments Maintenance – Remove & Merge Connect Item proposing this solution.

So let us try to create some queries which will allow us to identify the current situation of my database:

I have used a copy of ContosoRetailDW database, where I have created Clustered Columnstore Indexes for 4 of the tables: FactOnlineSales, FactSales, FactInventory and FactSalesQuota.

alter table dbo.[FactOnlineSales] DROP CONSTRAINT PK_FactOnlineSales_SalesKey

alter table dbo.[FactStrategyPlan] DROP CONSTRAINT PK_FactStrategyPlan_StrategyPlanKey

alter table dbo.[FactSales] DROP CONSTRAINT PK_FactSales_SalesKey

alter table dbo.[FactInventory] DROP CONSTRAINT PK_FactInventory_InventoryKey

alter table dbo.[FactSalesQuota] DROP CONSTRAINT PK_FactSalesQuota_SalesQuotaKey

alter table dbo.[FactOnlineSales] DROP CONSTRAINT FK_FactOnlineSales_DimCurrency

alter table dbo.[FactOnlineSales] DROP CONSTRAINT FK_FactOnlineSales_DimCustomer

alter table dbo.[FactOnlineSales] DROP CONSTRAINT FK_FactOnlineSales_DimDate

alter table dbo.[FactOnlineSales] DROP CONSTRAINT FK_FactOnlineSales_DimProduct

alter table dbo.[FactOnlineSales] DROP CONSTRAINT FK_FactOnlineSales_DimPromotion

alter table dbo.[FactOnlineSales] DROP CONSTRAINT FK_FactOnlineSales_DimStore

alter table dbo.[FactStrategyPlan] DROP CONSTRAINT FK_FactStrategyPlan_DimAccount

alter table dbo.[FactStrategyPlan] DROP CONSTRAINT FK_FactStrategyPlan_DimCurrency

alter table dbo.[FactStrategyPlan] DROP CONSTRAINT FK_FactStrategyPlan_DimDate

alter table dbo.[FactStrategyPlan] DROP CONSTRAINT FK_FactStrategyPlan_DimEntity

alter table dbo.[FactStrategyPlan] DROP CONSTRAINT FK_FactStrategyPlan_DimProductCategory

alter table dbo.[FactStrategyPlan] DROP CONSTRAINT FK_FactStrategyPlan_DimScenario

alter table dbo.[FactSales] DROP CONSTRAINT FK_FactSales_DimChannel

alter table dbo.[FactSales] DROP CONSTRAINT FK_FactSales_DimCurrency

alter table dbo.[FactSales] DROP CONSTRAINT FK_FactSales_DimDate

alter table dbo.[FactSales] DROP CONSTRAINT FK_FactSales_DimProduct

alter table dbo.[FactSales] DROP CONSTRAINT FK_FactSales_DimPromotion

alter table dbo.[FactSales] DROP CONSTRAINT FK_FactSales_DimStore

alter table dbo.[FactInventory] DROP CONSTRAINT FK_FactInventory_DimCurrency

alter table dbo.[FactInventory] DROP CONSTRAINT FK_FactInventory_DimDate

alter table dbo.[FactInventory] DROP CONSTRAINT FK_FactInventory_DimProduct

alter table dbo.[FactInventory] DROP CONSTRAINT FK_FactInventory_DimStore

alter table dbo.[FactSalesQuota] DROP CONSTRAINT FK_FactSalesQuota_DimChannel

alter table dbo.[FactSalesQuota] DROP CONSTRAINT FK_FactSalesQuota_DimCurrency

alter table dbo.[FactSalesQuota] DROP CONSTRAINT FK_FactSalesQuota_DimDate

alter table dbo.[FactSalesQuota] DROP CONSTRAINT FK_FactSalesQuota_DimProduct

alter table dbo.[FactSalesQuota] DROP CONSTRAINT FK_FactSalesQuota_DimScenario

alter table dbo.[FactSalesQuota] DROP CONSTRAINT FK_FactSalesQuota_DimStore;

GO

Create Clustered Columnstore Index PK_FactOnlineSales

on dbo.FactOnlineSales;

Create Clustered Columnstore index PK_FactSales

on dbo.FactSales;

Create Clustered Columnstore index PK_FactInventory

on dbo.FactInventory;

Create Clustered Columnstore index PK_FactSalesQuota

on dbo.FactSalesQuota;

I have than deleted some random numbers of rows across different tables, and now trying to identify if I should rebuild them or not (Updated on 27th of January 2015, after reminder from David Barbarin):

SELECT object_name(p.object_id) as TableName, p.partition_number as Partition, cast( Avg( (rg.deleted_rows * 1. / rg.total_rows) * 100 ) as Decimal(5,2)) as 'Total Fragmentation (Percentage)', sum (case rg.deleted_rows when rg.total_rows then 1 else 0 end ) as 'Deleted Segments Count', cast( (sum (case rg.deleted_rows when rg.total_rows then 1 else 0 end ) * 1. / count(*)) * 100 as Decimal(5,2)) as 'DeletedSegments (Percentage)' FROM sys.partitions AS p INNER JOIN sys.column_store_row_groups rg ON p.object_id = rg.object_id where rg.state = 3 -- Compressed (Ignoring: 0 - Hidden, 1 - Open, 2 - Closed, 4 - Tombstone) group by p.object_id, p.partition_number order by object_name(p.object_id);

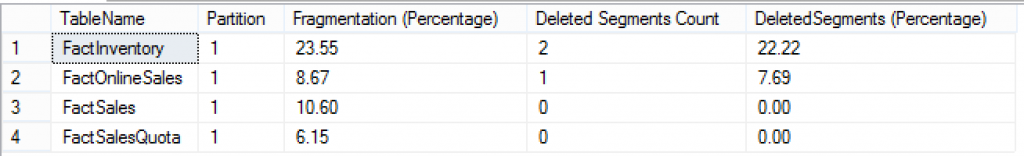

As you can see from my 4 Tables I have different percentages for the fragmentation spread over just 1 partition – I can clearly see that 2 of my tables have a fragmentation above 10% but at the same time only for 1 Table do I have Deleted Segments, while there is another table where we have a lower percentage of fragmentation but already 1 Deleted Segment.

As you can see from my 4 Tables I have different percentages for the fragmentation spread over just 1 partition – I can clearly see that 2 of my tables have a fragmentation above 10% but at the same time only for 1 Table do I have Deleted Segments, while there is another table where we have a lower percentage of fragmentation but already 1 Deleted Segment.

Notice, that the fragmentation percentage of the deleted segments can never be higher than the total fragmentation of the Table/Partition.

For me the FactInventory table should be rebuild even though it did not reached the total fragmentation Threshold of 30%, but because it it has reached over 20% of the total Deleted Segments.

Fragmentation based on the size of the data

I wish there would be a way to calculate the total size of the data that is deleted, but since Deleted Bitmap is quite a kind of a unicorn – as far as it is know, there is no way to determine it’s exact size, and than of course we can only estimate the size of the deleted data, which is compressed in a number of ways making it impossible to get an exact number.

Also the calculation of the total size would have to be spread against all of the columns and in DWH this might be hundreds of them.

I might revisit this blog post in the future with a solution, but at the moment I do not see a real necessity of calculating the value in bits & bytes.

to be continued with Clustered Columnstore Indexes – part 37 (“Deleted Bitmap & Delta-Store Sizes”)

And of course “AdaptiveIndexDefrag” (http://blogs.msdn.com/b/blogdoezequiel/archive/2011/07/03/adaptive-index-defrag.aspx) has support for Columnstore indexes since its inception…

What kind of support for Columnstore “AdaptiveIndexDefrag” does have ?

Can I define the percentage or the number of Segments per partition that should kick-off the rebuild process ?

Also since Columnstore Reorganize is not comparable with Rowstore Reorganize, what kind of param can be used to specify that I do not want it at all ?

Hi Niko,

I’m working on this now and your article is good but I also found one quite good about how to know when to rebuild a columnstore index. If you are interested here is the link: http://blogs.msdn.com/b/sqlcat/archive/2015/07/08/sql-2014-clustered-columnstore-index-rebuild-and-maintenance-considerations.aspx

All the best and keep up the good work.

Cheers,

Vitor

Hi Vitor,

yes I know of what Denzil wrote. Denzil is an extremely smart guy, he works in SQLCAT and he collaborates with Sunil (Columnstore PM) and Dev team directly, so everyone should pay attention whenever he blogs.

This particular article was written 1 year before Denzil’s, and the whole idea of it was to give my friend Ola some basis to include in his maintenance solution. :)

Since this article I have published further articles on how people should write the maintenance solution and which aspects are truly important. You should check out the following articles:

http://www.nikoport.com/2015/06/28/columnstore-indexes-part-57-segment-alignment-maintenance/

http://www.nikoport.com/2015/08/12/columnstore-indexes-part-62-row-groups-trimming/

My maintenance solution is still in work and will be released hopefully before the end of this year.

I think it will be fantastic, because it touches on so many different aspects of the Columnstore Indexes, plus it is able to learn from the results, where my algorithms are based on what I see in the real world, where pressures raising well before the official limit.

It will be included as a part of CISL – https://github.com/NikoNeugebauer/CISL

Best regards,

Niko

Thank you, Niko.

I can see that you still very well connected ;)

I’ve tested Ola’s script but still prefer Denzil’s. Will adapt it for our reality but there will be very few changes. Anyway, will continue following you to see if you come with a better maintenance solution.

Any chance of you coming to Switzerland, soon? If so, don’t forget to tell me so you can pay me a lunch :)

Cheers,

Vitor

Hey Vitor,

Well-connected ? You are exaggerating! :)

Ola has not implemented any Columnstore support yet, so yeah – you should use Denzil’s Script. That is the best of what I have seen on the market now.

I will let you know when my solution is ready. You can also sign up for notifications here: http://oh22.us10.list-manage.com/subscribe?u=c81f19d500be5688f15031262&id=b91bff2e44

Best regards,

Niko

Hi Niko,

Is there any news on when your maintenance solution might be ready? I’d be really interested to use it.

Thanks,

Adrian

Hi Adrian,

it was released in January 2016 as a part of CISL, currently fully supporting 2014 – https://github.com/NikoNeugebauer/CISL

The SQL Server 2016 support is still in the beta, I hope it to be released in the next days/weeks.

Best regards,

Niko Neugebauer

Thanks Niko.

Is the maintenance solution AlwaysOn aware?

Thanks,

Adrian

Hi Adrian,

since AlwaysOn Availability Groups do not support Clustered Columnstore for Readable Secondaries in SQL Server 2014, I never had any need for implementing any support.

Do you see it different ?

Best regards,

Niko

Hi Niko,

Agreed AlwaysOn doesn’t support Clustered Columnstore for Readable Secondaries. But in this scenario you only want to perform index maintenance on your primary replica so you need a way to determine this similar to Ola Hallengren’s scripts. Rather than having to disable and enable jobs each time you failover.

Also I believe in 2016 AlwaysOn does support Clustered Columnstore for Readable Secondaries so will this be fixed for then?

Thanks,

Adrian

Hi Adrian,

yes, in the long term there will be a support for AlwaysOn for 2016.

I can’t go into any concrete plans right now, since I am still preparing the current 2016 features,

but if you want to contribute with some code to make it faster, I believe everyone will benefit from it.

I will include the disabling of the secondary replica execution for 2014 in the next release – thank you for the idea!

Best regards,

Niko Neugebauer

Hi Adrian,

just to add that a newest version of Maintenance Solution at CISL is already supporting the AG now:

https://github.com/NikoNeugebauer/CISL/blob/master/SQL-2014/StoredProcs/cstore_doMaintenance.sql

Best regards,

Niko Neugebauer

Hi Niko,

Thanks for the maintenance solution. Any idea why code for temp tables is included as part of stored procedures? Can’t we just run the stored procedures in tempdb? I noticed that SPs are taking longer time as they are trying to analyze objects in tempdb. I think this is a redundant process when running the maintenance on multiple databases.

Thanks.

Hi Praveen,

I guess the origin of those decisions are hidden 10+ years ago behind the strategy between SQL Server 2005 – 2008 releases.

Having Azure SQL Database as the principal driver for Microsoft in the past decade, the context is always within a single Database …

Best regards,

Niko Neugebauer