Recently I have made some very interesting discoveries about Hekaton (aka In-Memory OLTP) that I felt absolutely necessary to share with the world.

I assume that the most people already know about this specifics, but discovering these details has helped me to shape my own opinion about the current state of the In-Memory in SQL Server & the Azure SQL Database. Especially it was helpful to understand how good and useful it can be for the ETL processes – since the general understanding is that for temp tables and for the staging tables – the In-Memory technology can be very effective.

These tests are based on the real-life experiences that I was running at a real client, trying to improve their ETL process.

I have decided to run the tests for the following scenarios (which of course do not represent the whole available spectre, but they do represent the most important ones, at least in my imagination).

These are the scenarios that I took under the scope in this blog post:

1) Writing into an In-Memory table

2) Reading data into a disk-based table from an In-Memory table

This sounds like a rather simple exercise, right ? … Right ?

Looking back at the original release of the Hekaton of the SQL Server 2014, there were absolutely no parallelism supported for the in-memory tables, but how the things are looking in SQL Server 2016 ?

With the introduction of the Columnstore Indexes for the In-Memory tables, I thought that the parallelism was introduced, and all the mentions of the limitations of the Natively Compiled Stored Procedures of using just 1 core was relevant because the other accesses of the In-Memory Tables can be parallelised.

The Setup

What test database to test this features to take, besides the good old favourite one – ContosoRetailDW, a free database from Microsoft.

Let us restore a fresh new copy on the instance of the SQL Server 2016 from the backup that is located at C:\Instance

USE [master]

alter database ContosoRetailDW

set SINGLE_USER WITH ROLLBACK IMMEDIATE;

RESTORE DATABASE [ContosoRetailDW]

FROM DISK = N'C:\Install\ContosoRetailDW.bak' WITH FILE = 1,

MOVE N'ContosoRetailDW2.0' TO N'C:\Data\ContosoRetailDW.mdf',

MOVE N'ContosoRetailDW2.0_log' TO N'C:\Data\ContosoRetailDW.ldf',

NOUNLOAD, STATS = 5;

alter database ContosoRetailDW

set MULTI_USER;

GO

GO

ALTER DATABASE [ContosoRetailDW] SET COMPATIBILITY_LEVEL = 140

GO

ALTER DATABASE [ContosoRetailDW] MODIFY FILE ( NAME = N'ContosoRetailDW2.0', SIZE = 2000000KB , FILEGROWTH = 128000KB )

GO

ALTER DATABASE [ContosoRetailDW] MODIFY FILE ( NAME = N'ContosoRetailDW2.0_log', SIZE = 400000KB , FILEGROWTH = 256000KB )

GO

We need to convert the FactOnlineSales table from the RowStore to the Columnstore, in order to get the data faster where it is needed :)

use ContosoRetailDW; GO ALTER TABLE dbo.[FactOnlineSales] DROP CONSTRAINT [PK_FactOnlineSales_SalesKey]; create clustered columnstore Index PK_FactOnlineSales on dbo.FactOnlineSales;

We shall add a Memory Optimised File Group to our table, so we can start using the In-Memory tables:

USE master; ALTER DATABASE [ContosoRetailDW] ADD FILEGROUP [ContosoRetailDW_Hekaton] CONTAINS MEMORY_OPTIMIZED_DATA GO ALTER DATABASE [ContosoRetailDW] ADD FILE(NAME = ContosoRetailDW_HekatonDir, FILENAME = 'C:\Data\xtp') TO FILEGROUP [ContosoRetailDW_Hekaton]; GO

finally arriving to the step of creating the empty staging tables for the tests:

FactOnlineSales_Staging – the InMemory SchemaOnly table

FactOnlineSales_DiskStaging – the Disk-Based staging table,

both equipped with a Nonclustered Index, serving as a Primary Key:

use ContosoRetailDW; DROP TABLE IF EXISTS [dbo].[FactOnlineSales_Staging]; DROP TABLE IF EXISTS [dbo].[FactOnlineSales_DiskStaging]; CREATE TABLE [dbo].[FactOnlineSales_Staging]( [OnlineSalesKey] [int] NOT NULL, [DateKey] [datetime] NOT NULL, [StoreKey] [int] NOT NULL, [ProductKey] [int] NOT NULL, [PromotionKey] [int] NOT NULL, [CurrencyKey] [int] NOT NULL, [CustomerKey] [int] NOT NULL, [SalesOrderNumber] [nvarchar](20) NOT NULL, [SalesOrderLineNumber] [int] NULL, [SalesQuantity] [int] NOT NULL, [SalesAmount] [money] NOT NULL, [ReturnQuantity] [int] NOT NULL, [ReturnAmount] [money] NULL, [DiscountQuantity] [int] NULL, [DiscountAmount] [money] NULL, [TotalCost] [money] NOT NULL, [UnitCost] [money] NULL, [UnitPrice] [money] NULL, [ETLLoadID] [int] NULL, [LoadDate] [datetime] NULL, [UpdateDate] [datetime] NULL, Constraint PK_FactOnlineSales_Staging PRIMARY KEY NONCLUSTERED ([OnlineSalesKey]) ) WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_ONLY); CREATE TABLE [dbo].[FactOnlineSales_DiskStaging]( [OnlineSalesKey] [int] NOT NULL, [DateKey] [datetime] NOT NULL, [StoreKey] [int] NOT NULL, [ProductKey] [int] NOT NULL, [PromotionKey] [int] NOT NULL, [CurrencyKey] [int] NOT NULL, [CustomerKey] [int] NOT NULL, [SalesOrderNumber] [nvarchar](20) NOT NULL, [SalesOrderLineNumber] [int] NULL, [SalesQuantity] [int] NOT NULL, [SalesAmount] [money] NOT NULL, [ReturnQuantity] [int] NOT NULL, [ReturnAmount] [money] NULL, [DiscountQuantity] [int] NULL, [DiscountAmount] [money] NULL, [TotalCost] [money] NOT NULL, [UnitCost] [money] NULL, [UnitPrice] [money] NULL, [ETLLoadID] [int] NULL, [LoadDate] [datetime] NULL, [UpdateDate] [datetime] NULL, Constraint PK_FactOnlineSales_DiskStaging PRIMARY KEY NONCLUSTERED ([OnlineSalesKey]) );

Writing into an In-Memory table

There is nothing easier to do than writing into an In-Memory table – so I thought, and armed with my beliefs I fired up the following script, loading 2 Million Rows into my In-Memory Non-Persisted table:

INSERT INTO dbo.FactOnlineSales_Staging SELECT TOP 2000000 * from dbo.FactOnlineSales;

Here is a fine actual execution plan that I have received from my test query:

a generous single-threaded plan, that brought me into a very dubious state of mind: I have set the threshold for parallelism to 5.0, and the Estimated Query Cost was actually 5.11, meaning that the Query Optimiser should have tried to make this plan go parallel, right ?

There might have been some reasons why it did not took place, but let us believe that the Query Optimiser has tried to make it work.

While I understand the serial insertion into the In-Memory table, I have difficulties understanding the part of the Columnstore data extraction, which should have perfectly gone into parallel state of mind.

The answer is hidden in the XML of the actual execution plan, and since the PASS Summit 2017, when the ever-awesome Kevin Farlee recommended me to always look for the answers in the XML, I religiously follow his advice ;)

NonParallelPlanReason=”NoParallelForDmlOnMemoryOptimizedTable” – SAY WHAT!? How on earth ??? Oh well, the In-Memory seems to dislike the fast ingestion of the data, not only functioning in the single-threaded fashion for the data insertion, but forcibly refusing to allow any parallel data processing of the source data.

This makes the usage of the In-Memory tables in so many scenarios ETL absolutely and incredibly unviable and undesirable.

No other words or emotions here. Unviable and Undesirable, because at the moment when your data will get big (and think about all those promises of ZB Zeta-Bytes that we are keep on hearing in the last decade), you will need multiple cores – this is a year 2018, for the goodness sake!

Oh, and if you believe that a persisted (SCHEMA_AND_DATA) tables are any different in the processing of the parallelism or increasing the speed of the data landing – I welcome you to try to prove that. :)

Reading from the In-Memory table

Armed with the latest available Cumulative Update for SQL Server 2016 – the CU7 for SQL Server with Service Pack 1 (build 4466), I have advanced with the same tests as for the loading data into the In-Memory table, but this time I simply read those 2 million rows from the In-Memory table:

insert into dbo.FactOnlineSales_DiskStaging select top 2000000 * from dbo.FactOnlineSales_Staging;

The actual execution plan looks painfully single-threaded and slow, if you know what I mean:

The Estimated Query Cost was actually 318.54, meaning that there all reasons to go parallel in that query, but hey I can accept the challenge !

In SQL Server 2016 we can force the parallel insertion into the destination table, which sometimes influences the parallelism of the whole execution plan, and for this purpose all we need to do is to add the WITH (TABLOCK) hint

-- Empty the table TRUNCATE TABLE dbo.FactOnlineSales_DiskStaging; insert into dbo.FactOnlineSales_DiskStaging WITH (TABLOCK) select top 2000000 * from dbo.FactOnlineSales_Staging;

There will be no indication of the non-parallel reasons in the actual execution plan this time, but the actual the execution plan itself will remain single-threaded as before.

Actually, the EstimatedAvailableDegreeOfParallelism will be set to 2: EstimatedAvailableDegreeOfParallelism=”2″

but the actual number of used cores will be just 1, making me sad, and thinking how it could be in the modern development that the parallelism was not one of the priorities :(

I thought, hey – I won’t give up that easily and tried the following combination, of using the SELECT INSERT command that was parallelised way before SQL Server 2016:

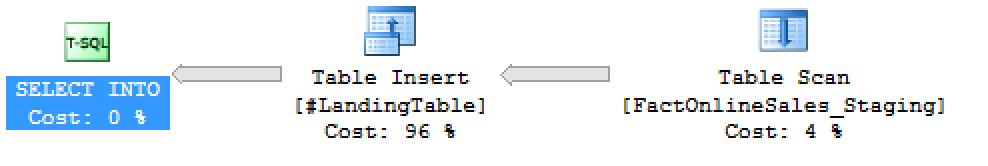

DROP TABLE IF EXISTS #LandingTable; SELECT * INTO #LandingTable FROM dbo.FactOnlineSales_Staging;

Surprise:

The “good” same reasons for the lack of parallelism is here, in the actual execution plan:

NonParallelPlanReason=”NoParallelForDmlOnMemoryOptimizedTable”!

This is again sad, very very sad.

In the search for the answers, I have stumbled upon the following Knowledge Base article from Microsoft: Update enables DML query plan to scan query memory-optimized tables in parallel in SQL Server 2016, which made my heart beat faster – maybe I can parallelise those queries reading from the In-Memory tables after all ?

Armed with the latest update, my SQL Server instance was ready for action and the only thing were to ensure:

– 130 compatibility level

– Enabling SQL Server query optimizer hotfixes, with the possible solutions through:

– Activating Trace Flag 4199

OR

– Activating Trace Flag 9939 (specific for this fix)

OR

– Activating Query Optimiser Hotfixes on the database level, through the Database Scoped Configuration.

Easy-peasy, lemon-squeezy:

use master; GO ALTER DATABASE [ContosoRetailDW] SET COMPATIBILITY_LEVEL = 130 GO USE [ContosoRetailDW] GO ALTER DATABASE SCOPED CONFIGURATION SET QUERY_OPTIMIZER_HOTFIXES = ON; GO

Let’s retry the 2 previous statements, the most useful one:

-- Empty the table TRUNCATE TABLE dbo.FactOnlineSales_DiskStaging; insert into dbo.FactOnlineSales_DiskStaging WITH (TABLOCK) select top 2000000 * from dbo.FactOnlineSales_Staging;

The same old execution plan as before. Those actions had no influences here.

This leaved me extremely upset and unsettled, but I had one more scenario to try out – the SELECT INTO one:

DROP TABLE IF EXISTS #LandingTable; SELECT * INTO #LandingTable FROM dbo.FactOnlineSales_Staging;

This time, I am happy to share a BOOMing experience of the parallel execution plan, both with parallel reading as well as the parallel insertion of the data:

Finally, with the help of the fix, that requires you to run at least

Cumulative Update 5 for SQL Server 2016 or Cumulative Update 3 for SQL Server 2016 SP1, you can get the parallel execution plan for the SELECT INSERT queries, involving Hekaton tables on the reading side of the query.

The trick to make the whole plan function in parallelism of the staging table is to make it a HEAP with no Primary Key, to avoid the TOP keyword when reading data from the In-Memory tables and of course to use the TABLOCK on it:

DROP TABLE IF EXISTS [dbo].[FactOnlineSales_DiskStaging]; CREATE TABLE [dbo].[FactOnlineSales_DiskStaging]( [OnlineSalesKey] [int] NOT NULL, [DateKey] [datetime] NOT NULL, [StoreKey] [int] NOT NULL, [ProductKey] [int] NOT NULL, [PromotionKey] [int] NOT NULL, [CurrencyKey] [int] NOT NULL, [CustomerKey] [int] NOT NULL, [SalesOrderNumber] [nvarchar](20) NOT NULL, [SalesOrderLineNumber] [int] NULL, [SalesQuantity] [int] NOT NULL, [SalesAmount] [money] NOT NULL, [ReturnQuantity] [int] NOT NULL, [ReturnAmount] [money] NULL, [DiscountQuantity] [int] NULL, [DiscountAmount] [money] NULL, [TotalCost] [money] NOT NULL, [UnitCost] [money] NULL, [UnitPrice] [money] NULL, [ETLLoadID] [int] NULL, [LoadDate] [datetime] NULL, [UpdateDate] [datetime] NULL ); insert into dbo.FactOnlineSales_DiskStaging WITH (TABLOCK) select * from dbo.FactOnlineSales_Staging

Look now:

We have a nice parallel execution plan, allowing us to dream about high-performing queries, extracting data from the In-Memory tables… If only we could load data in a parallel fashion into the In-Memory tables …

Final Thoughts

I am a kind of upset and disturbed by this discoveries.

Any serious Data Warehouse specialist cares about parallelism with a great attention, knowing that single-threaded queries against big tables (large millions) most usually mean the slow and painful alarm of the unfinished ETL process, which should be corrected as soon as possible.

The support for the parallelism through the fix, requiring the Query Optimiser Fixes to be enabled, or at least the Trace Flag 9939 (the smallest evil out of those 3 for me) is something that will prevent some of the customers to apply it.

I do understand that the Hekaton is the In-Memory OLTP, but it needs to involve a bit more to become a true In-Memory solution for the SQL Server and the Azure SQL Database, and for that purpose, the ETL part really requires parallelism for the Writing into the In-Memory tables … Especially in the case of the non-persistent ones.

Nothing has changed regarding the parallelism of the data loading from/into the In-Memory tables in the SQL Server 2017,

and this area needs urgent improvements for the future, if it still wants to remain viable for the ETL in the Data Warehousing area.

Hopefully this blog post is to updated in some not too distant future …

Niko thank you for this excellent post, i have been trying to figure out why an ETL query has been under performing on a temp table insert for a few days. I could see the plan was serial but could not figure out why as it had breached the cost threshold for parallelism.

It had been taking two hours for this insert, while on other more powerful instances the query goes through quickly but still with a serial plan.

Some of the source tables are in memory after reading this post i enabled the trace flag as a test and the plan went parallel immediately. Query completed in 2 mins.

This is a massive point for me, we utilize in memory for our ETL processes this limitation and the fix is a substantial performance impact. I’m sure other users have been affected.

Hi Jonathan,

thank you for the kind words. I hope that there will improvements in the next versions of the product – the In-Memory while has a huge potential, still have a good road to walk in order to reach the modern parallel processing status.

Best regards,

Niko

Good post, but bad news. I have been trying to test replacing temporary tables (where temp database is on ram disk) and in-memory tables as described here (https://docs.microsoft.com/en-us/sql/relational-databases/in-memory-oltp/faster-temp-table-and-table-variable-by-using-memory-optimization?view=sql-server-2017).

I am wondering will be any benefits of doing the above, but unfortunately on my test environment where no ram disk is involved, the temporary table beats in-memory one, because of lack of parallel operations of the latter.

Do you have any news if this behavior is changed in SQL Server 2017 or SQL Server 2019?

Regards,

Georgi

Hi Niko,

Respect for the work you have done. Do you have any internal news on if and when we can expect parallel insert into in memory OLTP?

Best Regards,

Georgi

Hi Georgi,

no idea … I do not expect it to change any time soon, unfortunately.

Best regards,

Niko

Hi Niko,

Thanks for the answer. For now, what I can do is to hope to see a change in SQL Server 2019 :-)

Best regards,

Georgi