Continuation from the previous 112 parts, the whole series can be found at https://www.nikoport.com/columnstore/.

This blog post serves as the continuation of the original blog post on the Row Group Merge functionalities that I have described in Columnstore Indexes – part 74 (“Row Group Merging & Cleanup, SQL Server 2016 edition”). I am sure that I am unable to describe & explain all possible situations where Row Group Merge process faces difficulties or works in a different way than one would expect, but I will try to explain at least a couple of situations that might serve as a guide for better understanding of the process limitations.

First of all, let us step back and take a look at the evil enemies of the Columnstore Indexes – and there are 2 main offenders: the memory pressure and the dictionary pressure. Both of them have an important influence on the performance of the Columnstore Indexes creation (Clustered & Nonclustered ones) and naturally as the consequence the overall performance of the queries running against the Columnstore Indexes. Both of these pressures resolve into something that is called Row Group Trimming, meaning that the compressed Row Groups will not be able to reach the perfect state of 1.048.756 rows (a little bit over a million rows), but they will be trimmed (cut) before, thus creating row groups with 500.000 or even some times 10.000 rows – yes, I have seen enough of those smaller ones on the real production sites).

Let us focus on those pressures one by one and see how they behave when we are trying to merge the Row Groups with ALTER INDEX … REORGANIZE command.

Memory Pressure

I decided to start with the Memory Pressure because in my books typically it is less evil or it can be battled/resolved through the traditional methods of increasing the overall amount of memory in the server or by manipulating the Resource Governor settings by defining a new Resource Group with more memory to be granted and then by running it for the user doing the maintenance proceedings for the Columnstore Indexes (if you are a lucky owner of the Enterprise Edition of the SQL Server).

To set things up, I will create a new specific user that I will call NikoSlow, which will be connecting to the test SQL Server 2016 SP1, under the following circumstances: From the 6 GB of available RAM to the SQL Server instance, I will allow only 5% of the Memory Grant, while cutting down to the 20% of the Maximum Memory available for the configured Resource Pool, as you can see on the picture. I have also set up a classifier function for the Resource Governor, that associates the user “NikoSlow” with the Workload Group “GreedyPool” as presented in the source code below:

To set things up, I will create a new specific user that I will call NikoSlow, which will be connecting to the test SQL Server 2016 SP1, under the following circumstances: From the 6 GB of available RAM to the SQL Server instance, I will allow only 5% of the Memory Grant, while cutting down to the 20% of the Maximum Memory available for the configured Resource Pool, as you can see on the picture. I have also set up a classifier function for the Resource Governor, that associates the user “NikoSlow” with the Workload Group “GreedyPool” as presented in the source code below:

CREATE OR ALTER FUNCTION [dbo].[fnUserClassifier]()

RETURNS sysname

WITH SCHEMABINDING

AS

BEGIN

DECLARE @WorkloadGroup AS SYSNAME

IF(SUSER_NAME() = 'NikoSlow')

SET @WorkloadGroup = 'GreedyPool'

ELSE

SET @WorkloadGroup = 'default'

RETURN @WorkloadGroup

END

After establishing a new connection with the user “NikoSlow”, we are ready to create a table that will serve as a test case for our memory pressure Row Groups merge.

DROP TABLE IF EXISTS dbo.MemoryPressure; CREATE TABLE dbo.MemoryPressure( C1 INT NOT NULL, C2 VARCHAR(20) NOT NULL, INDEX CCI_MemoryPressure CLUSTERED COLUMNSTORE );

We are ready to load some data into the dbo.MemoryPressure test table and lets load 1.000.000 rows into it (I have chosen the number of rows to be 1.000.000 specifically, because theoretically it will fit into just 1 Row Group perfectly, avoiding some of the re-compression and merge operations).

SET NOCOUNT ON; INSERT INTO dbo.MemoryPressure WITH (TABLOCK) (c1,c2) SELECT TOP (1000000) i, FORMAT(i,'d10') + FORMAT(i,'d10') FROM ( SELECT ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) as i FROM sys.objects s1 CROSS JOIN sys.objects s2 CROSS JOIN sys.objects s3 ) src;

For observing the details of the Row Groups, I will use the CISL function cstore_GetRowGRoupsDetails with the @tableName parameter set to filter down to the Row Groups of the table “MemoryPressure”

exec dbo.cstore_GetRowGroupsDetails @tableName = 'MemoryPressure', @preciseSearch = 1;

![]() As you can see on the picture above, we have 2 Row Groups that are compressed with just 524289 & 475711 rows in each of the row groups, and the reason for the trimming the size is the Memory Limitation.

As you can see on the picture above, we have 2 Row Groups that are compressed with just 524289 & 475711 rows in each of the row groups, and the reason for the trimming the size is the Memory Limitation.

Here comes a shiny moment of the Mathematics, and allow me to add that you do not need any AI to make the calculation: can any 2 Row Groups of this table be merged ? Doing the incredible process of addition we shall have 1.000.000 rows as a result. This what we expect, but can we get there, given that we are still under memory pressure because of our Resource Governor configuration.

Forcing the Row Group Merge & re-compression at this point, will bring us some changes in the pure size of the Row Groups:

ALTER INDEX CCI_MemoryPressure ON dbo.MemoryPressure REORGANIZE WITH (COMPRESS_ALL_ROW_GROUPS = ON);

But all consecutive executions will bring less changes, because typically there will be a stabilising point where the engine won’t be able to achieve any significant changes, while still not understanding that there is no more reason to try.

![]()

As you can see on the pictures, in this test case, the execution stabilises when we reach 556778 & 443212 rows in the Row Groups respectively. Any further Merge attempts will bring no real changes to the numbers in the Row Groups, unless of course we add some more memory. You might say – that’s fine, this is small work for a small amount of time, but take a leap of faith and imagine a 100 Million Rows table that has memory pressure and is being distributed between good number of Row Groups – this might present some problem for the amount of resources one need to spend on the process that brings no changes.

One can easily point that in such case the maintainer of the instance should be smart enough to do the right thing, because SQL Server is simply attempting what it was asked to do, and that maybe a change in a Cumulative Update will bring some changes in the future. Well, I am going to provide some way for this intelligence for the CISL in the nearest future.

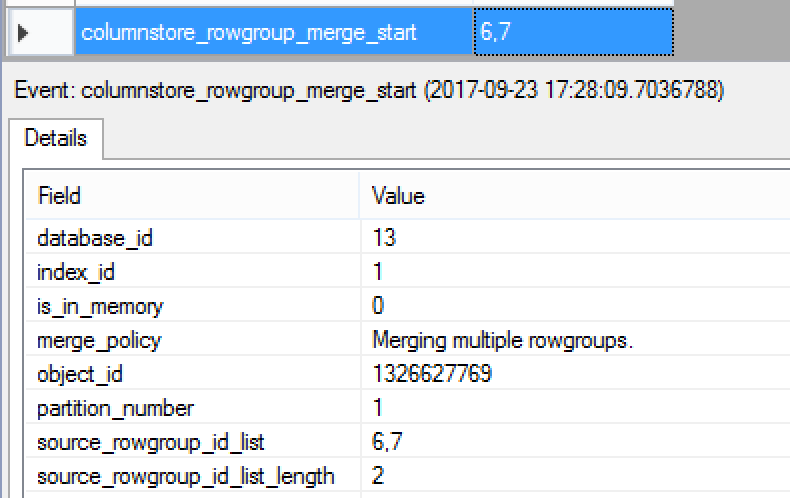

Another important angle of view I wanted to bring through the usage of the Extended Events, where I created the following XE Session with attempt to catch the following events into the Ring Buffer: sqlserver.columnstore_index_reorg_failed, sqlserver.columnstore_no_rowgroup_qualified_for_merge, sqlserver.columnstore_rowgroup_merge_complete, sqlserver.columnstore_rowgroup_merge_failed & sqlserver.columnstore_rowgroup_merge_start.

CREATE EVENT SESSION [Row Group Merge] ON SERVER ADD EVENT sqlserver.columnstore_index_reorg_failed, ADD EVENT sqlserver.columnstore_no_rowgroup_qualified_for_merge, ADD EVENT sqlserver.columnstore_rowgroup_merge_complete, ADD EVENT sqlserver.columnstore_rowgroup_merge_failed, ADD EVENT sqlserver.columnstore_rowgroup_merge_start ADD TARGET package0.ring_buffer GO

Running them against the process of merging stable Columnstore Row Groups, will produce the following result – with the Row Groups 6 & 7 being merged under the sqlserver.columnstore_rowgroup_merge_start event:

The result is very much expectable and predictable, you need to watch that you have enough resources, because as I always say – the Columnstore Indexes are the hungry beasts and they shall consume any resources they can lay their eyes on.

Dictionary Pressure

Let’s take a look at the most evil and uncorrectable pressure within SQL Server for the Columnstore Indexes – the Dictionary Pressure. For any final user without a bit of the internal knowledge of the Row Group sizes, Dictionaries & Pressures, it is always a huge surprise to find out that they can’t have those perfectly sized Row Groups with 1.048.576 rows.

Let us set up a table producing a good Dictionary Pressure and load 1.1 Million Rows into it, expecting to get just 2 Row Groups, while perfectly being aware that the result shall be quite different:

DROP TABLE IF EXISTS dbo.Pressure;

CREATE TABLE dbo.Pressure (

c1 int NOT NULL,

c2 INT NOT NULL,

c3 char(40) NOT NULL,

c4 varchar(800) NOT NULL,

c5 int not null

);

set nocount on

declare @outerloop int = 0

declare @i int = 0

while (@outerloop < 1100000)

begin

Select @i = 0

begin tran

while (@i < 2000)

begin

insert dbo.Pressure values (@i + @outerloop, @i + @outerloop, 'a',

concat (CONVERT(varchar, @i + @outerloop), (replicate ('b', 750)))

,ABS(CHECKSUM(NewId())) % 2000000 )

set @i += 1;

end

commit

set @outerloop = @outerloop + @i

set @i = 0

end

go

-- Create Clustered Columnstore Index

Create Clustered Columnstore Index CCI_Pressure

on dbo.Pressure with (maxdop = 1);

For confirming, let us use the CISL function cstore_getRowGroupsDetails:

exec dbo.cstore_GetRowGroupsDetails @tableName = 'Pressure', @preciseSearch = 1;

A typical Row Group in this test case contains 65546 Rows, that is well beyond the expected maximum number of rows within a Row Group, and the reason as expected is a Dictionary Pressure, that you can see on the right side under the column trim_reason_desc.

A typical Row Group in this test case contains 65546 Rows, that is well beyond the expected maximum number of rows within a Row Group, and the reason as expected is a Dictionary Pressure, that you can see on the right side under the column trim_reason_desc.

This is already looks like a great candidate for the Row Group Merge operation, but let us spice up things just a pinch, by deleting the first 100.000 rows of our table:

DELETE FROM dbo.Pressure WHERE C1 <= 100000;

The current situation of the Row Groups is to be seen on the screenshot below, while using the same command as before:

exec dbo.cstore_GetRowGroupsDetails @tableName = 'Pressure', @preciseSearch = 1;

We are definitely ready to merge a couple of Row Groups, or at least both of the Row Groups with the deleted rows (ids 1 & 2) should at least do Self-Merge and Auto-Elimination respectively, right?

Let us force it with the ALTER INDEX ... REORGANIZE command:

ALTER INDEX CCI_Pressure ON dbo.Pressure REORGANIZE;

The above statement takes milliseconds to execute and the reason behind this is that nothing shall happen to our table. For some internal algorithm reasons, the Query Optimiser shall not apply any changes to those tables, even it absolutely obvious that it should in the cases of the Row Groups with ids 1 & 2, because the re-compression of the less amount of data will result is to be expected at least equally successfully as the complete one without deleted rows, and the fully deleted Row Group should be definitely removed anyway.

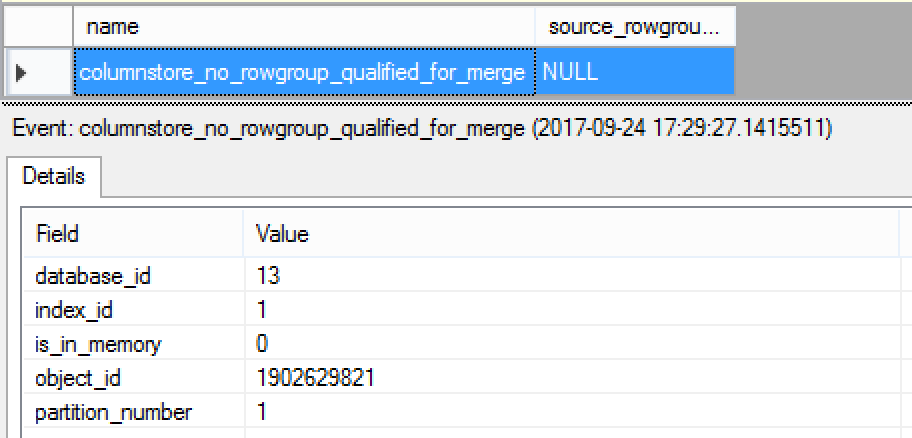

Consulting the Extended Events session that we have created already for the Memory Pressure scenario shall give us confirmation that no action was taken:

We have caught the columnstore_no_rowgroup_qualified_for_merge event, meaning that the Query Optimiser decided not to advance with Row Group merge.

It is very interesting to notice that the Query Optimiser choose will affect only the Row Groups that are suffering under Dictionary Pressure, meaning that the regular Row Groups that were not trimmed with this kind of pressure will be subject to compress under the regular conditions.

For this purpose, let us change our destination table just a little bit, by altering the column types for the text ones to NULL:

ALTER TABLE dbo.Pressure ALTER COLUMN c3 char(40) NULL; ALTER TABLE dbo.Pressure ALTER COLUMN c4 varchar(800) NULL;

And load 100.000 rows into a Delta-Store with 2 following loads of 150.000 rows into compressed Row Groups that shall become candidates for a successful Row Group Merge operation:

INSERT INTO dbo.Pressure (c1,c2,c5) SELECT TOP (100000) i, i, i FROM ( SELECT ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) as i FROM sys.objects s1 CROSS JOIN sys.objects s2 CROSS JOIN sys.objects s3 ) src; INSERT INTO dbo.Pressure (c1,c2,c5) SELECT TOP (150000) i, i, i FROM ( SELECT ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) as i FROM sys.objects s1 CROSS JOIN sys.objects s2 CROSS JOIN sys.objects s3 ) src; INSERT INTO dbo.Pressure (c1,c2,c5) SELECT TOP (150000) i, i, i FROM ( SELECT ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) as i FROM sys.objects s1 CROSS JOIN sys.objects s2 CROSS JOIN sys.objects s3 ) src;

Looking at the added Row Groups will give you a similar picture:

![]()

If we execute the ALTER INDEX REORGANIZE command, forcing the Row Group Merge:

ALTER INDEX CCI_Pressure ON dbo.Pressure REORGANIZE;

We shall have the following picture after successful compression of the 2 added Row Groups plus one residual Row Group (id = 16), while the process shall keep on avoiding the so much needed recompression of the fragmented & fully deleted Row Groups:

exec dbo.cstore_GetRowGroupsDetails @tableName = 'Pressure', @preciseSearch = 1;

Final Thoughts

Bad stuff happens, but the above presented problems have a healthy amount of reason, because the pressures (Memory & Dictionary) do not disappear and are still present even if we are not building but Merging Row Groups. In reality, I would expect that Merging would require even more memory, because of all the overhead from reading multiple Row Groups involved.

SQL Server 2017

A similar situation can be found within SQL Server 2017 (the difference is that the fully deleted Row Groups will be found and removed - is this fix coming over to SQL Server 2016?).

Overall I think that these limitations are good candidates for be fixed in the vNext version after the SQL Server 2017, but first (and very, very first :)), one should finally start fixing the situation with Dictionary Pressure where we almost have no solution right now.

In the mean time, times and over again, I want to stress - do not rely completely on ALTER INDEX REORGANIZE command alone when doing maintenance of the Columnstore Indexes, you shall avoid a number of huge problems, such as unremovable logical fragmentation, as I have shown in this blog post for the Dictionary Pressure.

to be continued with Columnstore Indexes – part 114 (“Machine Learning Services”)

So in the dictionary pressure case where sufficient number of records are deleted, we should rebuild index. Is it correct way please?

Do new versions show the same behavior still?

Hi Manish,

Yes, what you have written is correct.

The behaviour is still the same – the Dictionary Pressure still does not have an internal solution.

Best regards,

Niko Neugebauer

One difference I saw is for memory pressure trimmed row groups, auto merge, did do proper merging in SQL version 1.XX unlike the dictionary pressure.

Well, that sounds that your Row Groups were still under memory pressure, probably.

Did you check the Extended Events ?

Best regards,

Niko